Antonio Terceiro: pristine-tar updates

Introduction

pristine-tar is a tool that is present in the workflow of a lot of Debian people. I adopted it last year after it had been orphaned by its creator, Joey Hess. Shortly after that, Tomasz Buchert joined me, and we are now a functional two-person team.

The goals of pristine-tar are to import the contents of a pristine upstream tarball into a VCS repository, and to be able to later reconstruct that exact same tarball, bit by bit, based on the contents in the VCS, so we don't have to store a full copy of that tarball. This is done by storing a binary delta file, which can be used to reconstruct the original tarball from a tarball produced with the contents of the VCS. Ultimately, we want to make sure that the tarball that is uploaded to Debian is exactly the same as the one that has been downloaded from upstream, without having to keep a full copy of it around if all of its contents are already extracted in the VCS anyway.
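To make the delta idea concrete, here is a minimal sketch in Python. It is not how pristine-tar is implemented: the real tool uses xdelta or xdelta3, while this toy uses `difflib` from the standard library, and the names `make_delta` and `apply_delta` as well as the sample byte strings are made up for illustration. It only shows the principle: keep the differences between a regenerated tarball and the original, then rebuild the original from the regenerated one plus those differences.

```python
import difflib
import hashlib


def make_delta(regenerated: bytes, original: bytes) -> list:
    """Describe `original` as copies from `regenerated` plus literal bytes."""
    matcher = difflib.SequenceMatcher(None, regenerated, original, autojunk=False)
    delta = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            # This range is identical, so only its position is stored.
            delta.append(("copy", i1, i2))
        elif j2 > j1:
            # Bytes that exist only in the original are stored literally.
            delta.append(("data", original[j1:j2]))
    return delta


def apply_delta(regenerated: bytes, delta: list) -> bytes:
    """Rebuild the original from the regenerated bytes and the delta."""
    out = bytearray()
    for op in delta:
        if op[0] == "copy":
            out += regenerated[op[1]:op[2]]
        else:
            out += op[1]
    return bytes(out)


# Hypothetical stand-ins for "tarball from upstream" and "tarball rebuilt from the VCS".
original = b"header-v1|" + b"file contents " * 20 + b"|trailer-A"
regenerated = b"header-v2|" + b"file contents " * 20 + b"|trailer-B"

delta = make_delta(regenerated, original)
rebuilt = apply_delta(regenerated, delta)

# The rebuilt bytes must match the original exactly, bit by bit.
assert hashlib.sha256(rebuilt).digest() == hashlib.sha256(original).digest()
```

The closer the regenerated tarball is to the original, the fewer literal bytes the delta has to carry, which is why small deltas are the goal.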

The current state of the art, and perspectives for the future

pristine-tar solves a wicked problem, because our ability to reconstruct the original tarball is affected by changes in the behavior of tar and of all of the compression tools (gzip, bzip2, xz), and by what exact options were used when creating the original tarballs. Because of this, pristine-tar currently has a few embedded copies of old versions of compressors to be able to reconstruct tarballs produced by them, and also relies on an ever-evolving patch to tar that has been carried in Debian for a while.
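A small Python sketch illustrates why compressor options matter so much. It uses the standard `gzip` module rather than the command-line tools, and the payload is made up, so take it as an illustration of the effect and not as something pristine-tar does: the same content compressed at two different levels decompresses identically, yet the compressed files are not the same bytes.

```python
import gzip
import hashlib

# Made-up payload standing in for the uncompressed tar stream.
payload = b"".join(b"line %d of an upstream source file\n" % i for i in range(5000))

# Same content, same timestamp in the gzip header, different compression level.
fast = gzip.compress(payload, compresslevel=1, mtime=0)
best = gzip.compress(payload, compresslevel=9, mtime=0)

# The contents survive either way...
assert gzip.decompress(fast) == payload
assert gzip.decompress(best) == payload

# ...but the compressed files differ, so their checksums differ too.
print(hashlib.sha256(fast).hexdigest() == hashlib.sha256(best).hexdigest())
```

To reproduce a tarball bit by bit, it is therefore not enough to know its contents: the exact compressor behavior and options have to be reproduced as well.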

So basically keeping pristine-tar working is a game of Whac-A-Mole. Joey provided a good summary of the situation when he orphaned pristine-tar.

Going forward, we may need to rely on other ways of ensuring integrity of upstream source code. That could take the form of signed git tags, signed uncompressed tarballs (so that the compression doesn't matter), or maybe even a different system for storing actual tarballs. Debian bug #871806 contains an interesting discussion on this topic.

Recent improvements

Even if keeping pristine-tar useful in the long term will be hard, too much of Debian work currently relies on it, so we can't just abandon it. Instead, we keep figuring out ways to improve. And I have good news: pristine-tar has recently received updates that improve the situation quite a bit.

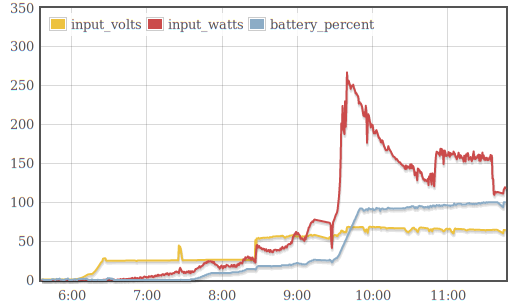

In order to understand how much better we are getting at it, I created a visualization of the regression test suite results. With the help of data from there, let's look at the improvements made since pristine-tar 1.38, which was the version included in stretch.

pristine-tar 1.39: xdelta3 by default

This was the first release made after the stretch release, and it made xdelta3 the default delta generator for newly imported tarballs. Existing tarballs with deltas produced by xdelta are still supported; this only affects new imports.

The support for multiple delta generators was written by Tomasz and had been there since 1.35, but we decided to only flip the switch after xdelta3 was supported in a stable release.

pristine-tar 1.40: improved compression heuristics

pristine-tar uses a few heuristics to produce the smallest delta possible, and this includes trying different compression options. In this release, Tomasz included a contribution by Lennart Sorensen to also try the --gnu option, which greatly improved the support for rsyncable gzip-compressed files. We can see an example of the type of improvement we got in the regression test suite data for delta sizes for faad2_2.6.1.orig.tar.gz:

pristine-tar 1.41: support for signatures

This release saw the addition of support for storage and retrieval of upstream signatures, contributed by Chris Lamb.

pristine-tar 1.42: optionally recompressing tarballs

I had this idea and wanted to try it out: most of our problems reproducing tarballs come from tarballs produced with old compressors, or from changes in compressor behavior, or from uncommon compression options being used. What if we could just recompress the tarballs before importing them? Yes, this kind of breaks the "pristine" bit of the whole business, but on the other hand, 1) the contents of the tarball are not affected, and 2) even if the initial tarball is not bit by bit the same as the upstream release, at least future uploads of that same upstream version with Debian revisions can be regenerated just fine.

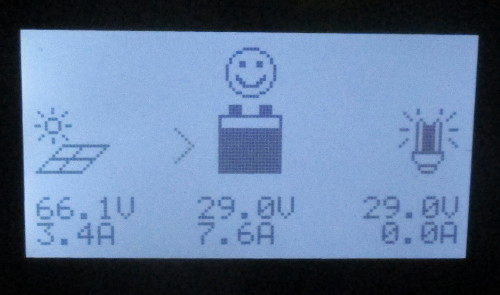

In some cases, as is the case for the test tarball util-linux_2.30.1.orig.tar.xz, recompressing is what makes it possible to reproduce the tarball (and thus import it with pristine-tar) at all:

In other cases, if the current heuristics can't produce a reasonably small delta, recompressing makes a huge difference. That's the case for mumble_1.1.8.orig.tar.gz:

Recompressing is not enabled by default, and can be enabled by passing the --recompress option. If you are using pristine-tar via a wrapper tool like gbp-buildpackage, you can use the $PRISTINE_TAR environment variable to set options that will affect any pristine-tar invocations.
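If you drive a wrapper tool from a script, the environment variable can be set for just that child process. The following Python sketch shows one way to do that. The helper name `env_with_pristine_tar_options` is made up, and I am assuming that the options are given in $PRISTINE_TAR as a plain shell-style string; check the pristine-tar(1) manual page for the exact format.

```python
import os
import shlex


def env_with_pristine_tar_options(options, base_env=None):
    """Return a copy of the environment with $PRISTINE_TAR set.

    Assumption: $PRISTINE_TAR holds the extra options as a single
    shell-style string, e.g. "--recompress".
    """
    env = dict(os.environ if base_env is None else base_env)
    env["PRISTINE_TAR"] = shlex.join(options)
    return env


# A tiny base environment is used here only to keep the example self-contained.
env = env_with_pristine_tar_options(["--recompress"], base_env={"PATH": "/usr/bin"})
print(env["PRISTINE_TAR"])
```

The resulting dictionary can be passed as the `env` argument of `subprocess.run` when calling the wrapper, so that the setting does not leak into the rest of your session.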

Also, even if you enable recompression, pristine-tar will only try it if the delta generation fails completely, or if the delta produced from the original tarball is too large. You can control what "too large" means by using the --recompress-threshold-bytes and --recompress-threshold-percent options. See the pristine-tar(1) manual page for details.
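The decision described above can be sketched roughly as follows in Python. This is not pristine-tar's actual code: the function name is made up, the numbers in the example calls are arbitrary, and in particular the way the two thresholds combine (here, exceeding either one counts as "too large") is an assumption on my part. The manual page is the authority on the real behavior.

```python
from typing import Optional


def should_try_recompression(
    delta_size: Optional[int],
    tarball_size: int,
    threshold_bytes: int,
    threshold_percent: float,
) -> bool:
    """Decide whether recompressing the tarball is worth a try.

    delta_size is None when delta generation failed completely.
    Assumption: exceeding EITHER threshold means "too large".
    """
    if delta_size is None:
        # No delta at all: recompression is the only remaining option.
        return True
    too_large_absolute = delta_size > threshold_bytes
    too_large_relative = delta_size * 100 > threshold_percent * tarball_size
    return too_large_absolute or too_large_relative


# Arbitrary illustrative numbers, not pristine-tar defaults.
print(should_try_recompression(None, 1_000_000, 50_000, 10))     # delta failed
print(should_try_recompression(2_000, 1_000_000, 50_000, 10))    # small delta
print(should_try_recompression(400_000, 1_000_000, 50_000, 10))  # large delta
```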
